Decoding A2A: AgentRank and the Mechanics of Decentralized AI Trust

In a previous article, "The AI Revolution Won’t Be Centralized: Why Your Next AI Needs a Reputation," we explored how multi-agent AI systems are set to reshape digital coordination and why trust is the glue that holds it all together. We introduced AgentRank, a decentralized reputation system designed to work hand-in-hand with the A2A (Agent-to-Agent) communication standard. It’s like a Yelp for AI agents, helping you figure out who’s reliable. Now, let’s take a closer look at how AgentRank works with A2A to create a secure, open ecosystem. By combining a standardized communication framework with a blockchain-based trust layer, this integration ensures AI agents can discover, verify, and collaborate with trustworthy peers without centralized gatekeepers.

Introduction

Artificial Intelligence (AI) is evolving quickly. Instead of big, all-in-one systems, we're now seeing specialized AI agents working together across platforms to solve complex problems. In April 2025, Google introduced the Agent2Agent (A2A) protocol, which acts like the "HTTP of AI": a standard way for agents to find each other, communicate, and work together.

In other words: A2A is like giving AI agents a common language and meeting place to collaborate.

But just having a shared protocol isn't enough. We also need a decentralized way to manage who these agents are and whether they can be trusted. Relying on centralized AI directories (like app stores) can lead to control by a few companies, create bias, and become points of failure.

To solve this, a system called AgentRank was built on top of Intuition's decentralized knowledge graph. This system creates a transparent, permissionless “Web of Trust” where anyone can see and verify who an agent is and whether they’re trustworthy.

In other words: AgentRank is like a public, tamper-proof reputation system for AI agents.

The Foundation: Intuition’s Decentralized Knowledge Graph

AgentRank is powered by Intuition, a decentralized platform that curates knowledge and builds trust among agents. It acts as a neutral, public registry—like a bulletin board where information about AI agents is posted and verified by the community using tokens.

In other words: Think of Intuition as a shared, tamper-proof Wikipedia for AI agents, where people vote with tokens on what's true.

Instead of being controlled by one company, this system is maintained by its users, aligning with Web3 values like decentralization and fairness.

Key Components

1. Decentralized Identifiers (DIDs)

Each AI agent gets a unique Decentralized Identifier (DID) linked to cryptographic keys. The DID and its public keys are stored on a blockchain, and only the holder of the matching private key can prove it controls the DID.

In other words: A DID is like a digital ID card that proves an agent is who it says it is.

2. Verifiable Credentials (VCs)

Agents can show credentials—like certificates or skills—signed by trusted parties and saved on the blockchain for others to verify.

In other words: VCs are like diplomas or badges that prove what an agent can do.

3. Token-Curation and Staking

People stake tokens to submit or verify information about agents. If the information they back turns out to be accurate, they earn rewards; if it turns out to be wrong, they lose tokens.

In other words: It’s a system that rewards truth and punishes lies using cryptocurrency.
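To make the incentive concrete, here is a minimal settlement sketch. The rule it implements (the losing side forfeits a share of its stake, which is split among the winners in proportion to their stake) is an assumption for illustration, not Intuition's actual reward mechanism; `Stake`, `settle_claim`, and `slash_rate` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Stake:
    staker: str
    amount: float
    supports: bool  # True: backs the claim; False: disputes it


def settle_claim(stakes: list, claim_is_true: bool, slash_rate: float = 1.0) -> dict:
    """Hypothetical settlement: losers forfeit `slash_rate` of their stake,
    and the forfeited pool is shared among winners pro rata to their stake."""
    if not 0.0 <= slash_rate <= 1.0:
        raise ValueError("slash_rate must be in [0, 1]")
    winners = [s for s in stakes if s.supports == claim_is_true]
    losers = [s for s in stakes if s.supports != claim_is_true]
    pool = sum(s.amount * slash_rate for s in losers)
    winning_total = sum(s.amount for s in winners)
    # Note: if nobody backed the correct outcome, the pool is paid to no one (burned).
    payouts: dict = {}
    for s in losers:
        payouts[s.staker] = payouts.get(s.staker, 0.0) + s.amount * (1 - slash_rate)
    for s in winners:
        reward = pool * s.amount / winning_total if winning_total > 0 else 0.0
        payouts[s.staker] = payouts.get(s.staker, 0.0) + s.amount + reward
    return payouts
```

With Alice and Bob staking 100 and 50 tokens on a claim that proves true and Carol staking 30 against it, full slashing pays Alice 120, Bob 60, and Carol nothing.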

4. Immutable Interaction Records

All agent actions—like completed tasks or collaborations—are permanently recorded on the blockchain.

In other words: It’s a public track record that shows what each agent has done.

5. Knowledge Graph Structure

The system organizes information in a graph using Atoms (agents or skills) and Triples (relationships like "Agent A hasSkill translation"). Tokens are staked on these relationships to show trust.

In other words: It's a map of who knows what, backed by tokens to show confidence.

This setup allows agents to build their on-chain reputations—public profiles based on their actions and peer endorsements.
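The Atom/Triple structure and its token backing can be sketched as a small in-memory model. This is a simplified illustration, not Intuition's on-chain data model: the class names, the `hasSkill` predicate label, and the rule that a claim's confidence equals the share of tokens staked in its favor are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Atom:
    """A node in the graph: an agent DID, a skill, or a predicate name."""
    label: str


@dataclass(frozen=True)
class Triple:
    """A claim of the form (subject, predicate, object)."""
    subject: Atom
    predicate: Atom
    obj: Atom


class KnowledgeGraph:
    def __init__(self) -> None:
        # triple -> [tokens staked for the claim, tokens staked against it]
        self._stakes: dict = defaultdict(lambda: [0.0, 0.0])

    def stake(self, triple: Triple, amount: float, supports: bool = True) -> None:
        if amount <= 0:
            raise ValueError("stake must be positive")
        self._stakes[triple][0 if supports else 1] += amount

    def confidence(self, triple: Triple) -> Optional[float]:
        """Share of staked tokens backing the claim, or None if nobody has staked."""
        if triple not in self._stakes:
            return None
        pro, con = self._stakes[triple]
        return pro / (pro + con)

    def objects(self, subject: Atom, predicate: Atom, min_confidence: float = 0.5) -> list:
        """Objects linked to `subject` by `predicate` with enough token backing."""
        return [t.obj for t in self._stakes
                if t.subject == subject and t.predicate == predicate
                and self.confidence(t) >= min_confidence]
```

For example, staking 90 tokens for and 10 against the triple ("did:example:agent-a", "hasSkill", "translation") gives it a confidence of 0.9, so `objects` returns that skill for agent A.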

AgentRank: A Decentralized Reputation Algorithm

AgentRank calculates a reputation score for each agent using a trust graph—a network of connections and endorsements between agents.

In other words: AgentRank is like a credit score for AI agents, but built from decentralized data.

It is designed around four questions:

- Whether trust data is open and verifiable.

- Whether fake identities (Sybil attacks) are prevented.

- Whether agents can preserve their privacy.

- Whether the score reflects different dimensions, such as task performance and peer endorsements.

Formal Trust Graph Model

The system models agents and their trust relationships as a graph:

- Nodes (V) = Agents

- Edges (E) = Trust or endorsements between agents

Each edge has a weight showing how strong the endorsement is, based on:

- Direct ratings or stakes

- Past successful tasks

- Verified credentials

- Delegated trust

The final reputation score $R(v)$ is calculated using a formula similar to PageRank (used by Google Search) and EigenTrust.

In other words: Agents earn reputation when trusted by other trustworthy agents, and scores update through a cycle until they stabilize.

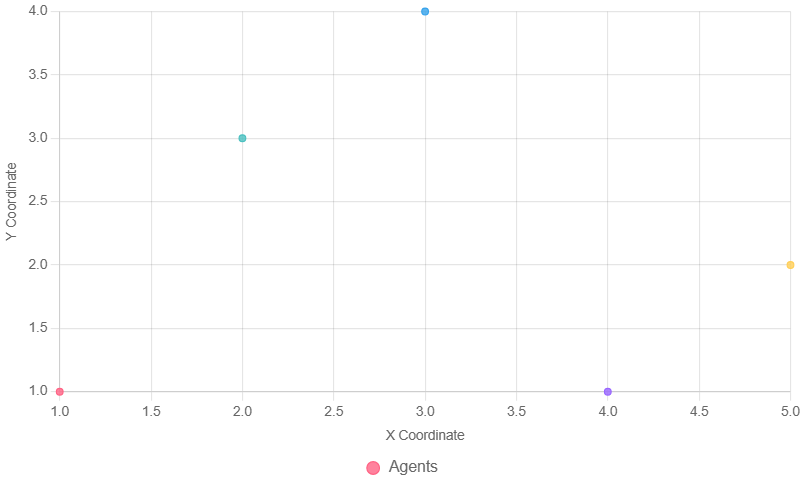

Chart Description

The scatter chart above represents a trust graph with 5 agents (A, B, C, D, E). Each point's size reflects the agent's reputation score $R(v)$, calculated iteratively as in PageRank or EigenTrust. The positions are arbitrary and chosen only for visualization. Annotations represent edges, with labels indicating trust weights (e.g., 0.8 for a strong endorsement, 0.3 for a weaker one). Colors distinguish the agents, using a palette that works in both dark and light themes.
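The iterative scoring the chart illustrates can be sketched as a PageRank/EigenTrust-style power iteration. The update rule used here, $R(v) = (1-d)\,p(v) + d \sum_u R(u)\, w_{uv} / \sum_k w_{uk}$, is the standard damped form and an assumption about how AgentRank combines edges. The damping factor is called `damping` ($d$) rather than $\alpha$ so as not to presume how the source defines $\alpha$, and `pretrust` ($p$) plays the role of EigenTrust's pre-trusted peers.

```python
def agent_rank(edges, pretrust, damping=0.85, tol=1e-10, max_iter=1000):
    """Power iteration for R(v) = (1 - d) * p(v) + d * sum_u R(u) * w_uv / sum_k w_uk.

    edges:    {endorser: {endorsee: weight}} with non-negative weights
    pretrust: {agent: prior} summing to 1 (the restart distribution p)
    damping:  d, the probability of following an endorsement instead of restarting
    """
    nodes = set(pretrust) | set(edges)
    for out in edges.values():
        nodes |= set(out)
    scores = {v: pretrust.get(v, 0.0) for v in nodes}
    for _ in range(max_iter):
        new = {v: (1 - damping) * pretrust.get(v, 0.0) for v in nodes}
        for u in nodes:
            out = edges.get(u, {})
            total = sum(out.values())
            if total == 0:
                # No outgoing endorsements: hand this agent's mass back via the prior.
                for v in nodes:
                    new[v] += damping * scores[u] * pretrust.get(v, 0.0)
            else:
                # Split this agent's reputation across its endorsements by weight.
                for v, w in out.items():
                    new[v] += damping * scores[u] * w / total
        converged = sum(abs(new[v] - scores[v]) for v in nodes) < tol
        scores = new
        if converged:
            break
    return scores
```

With uniform pre-trust over agents A to E and a pair of Sybils that endorse only each other, the Sybils end with a score of exactly zero, because no trust ever flows into their cluster. Scores always sum to 1, so they read as shares of the network's total trust.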

Trust Decay and Dispute Resolution

Endorsements lose weight over time to reflect recent behavior:

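$$w_{uv}(T) = w \cdot \exp\big(-\lambda (T - t_0)\big)$$

where $w$ is the endorsement's original weight, $t_0$ is the time it was made, $T$ is the current time, and $\lambda > 0$ is the decay rate. See the PDF for further context.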

In other words: Trust fades unless it’s regularly reinforced.
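A direct translation of the decay formula, assuming time is measured in days. The helper `decay_rate_for_half_life` is an addition for intuition (a hypothetical name): choosing $\lambda = \ln 2 / h$ means an endorsement loses half its weight every $h$ days.

```python
import math


def decayed_weight(w: float, t0: float, now: float, lam: float) -> float:
    """Weight of an endorsement made at time t0, evaluated at time `now` (T in the formula)."""
    if now < t0:
        raise ValueError("endorsement time t0 is in the future")
    return w * math.exp(-lam * (now - t0))


def decay_rate_for_half_life(half_life: float) -> float:
    """Lambda such that an endorsement keeps half its weight after `half_life` time units."""
    return math.log(2) / half_life
```

With a 30-day half-life, an endorsement of weight 0.8 counts for about 0.4 after 30 days and about 0.2 after 60.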

If someone challenges a claim, it goes into dispute. The community votes or arbitrates (using tools like Kleros), and the outcome adjusts the agent’s score.

Sybil Resistance and Collusion Deterrence

AgentRank fights fake identities and collusion through:

- Entry Costs: Every agent must stake tokens to participate, so each fake identity costs the attacker real tokens.

- Graph Analysis: Fake agents rarely get real endorsements.

- Pattern Detection: Suspicious behavior—like agents only endorsing each other—can be flagged and penalized.
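Pattern detection can take many forms, and the source does not say which heuristics AgentRank uses. One simple, hypothetical check measures how insular a group is: the share of the trust it receives that comes from its own members. A ring of colluders that mostly endorses itself scores near 1. Finding candidate groups (for example with a community-detection algorithm) is not shown, and `reciprocal_pairs`, `insularity`, `looks_collusive`, and the 0.9 threshold are illustrative.

```python
def reciprocal_pairs(edges: dict) -> set:
    """Pairs of agents that endorse each other, returned as sorted tuples."""
    pairs = set()
    for u, out in edges.items():
        for v in out:
            if u != v and u in edges.get(v, {}):
                pairs.add(tuple(sorted((u, v))))
    return pairs


def insularity(edges: dict, group: set) -> float:
    """Fraction of the endorsement weight flowing into `group` that comes from inside it."""
    internal = external = 0.0
    for u, out in edges.items():
        for v, w in out.items():
            if v not in group or u == v:
                continue  # only count endorsements received by the group; skip self-loops
            if u in group:
                internal += w
            else:
                external += w
    total = internal + external
    return internal / total if total > 0 else 0.0


def looks_collusive(edges: dict, group: set, threshold: float = 0.9) -> bool:
    """Flag a group whose incoming trust is almost entirely self-generated."""
    return insularity(edges, group) >= threshold
```

Reciprocity alone is weak evidence (honest partners often endorse each other), which is why a check like this would flag groups for review or penalties rather than prove collusion.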

Privacy Preservation

Agents don’t have to reveal their real identity. They use pseudonyms (DIDs), and can keep their data private using Zero-Knowledge Proofs (ZKPs).

Integrating AgentRank with A2A

By adding AgentRank to A2A, agents can reliably verify who they’re working with.

Agent Discovery

When advertising themselves for discovery, agents publish their DID and the blockchain network where it is registered. They can also include proofs of their reputation and credentials.

In other words: It's like sharing your resume and references when applying for a job.
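As a sketch of what such an advertisement might look like, the card below uses a subset of the standard A2A Agent Card fields (`name`, `url`, `capabilities`, `skills`, and so on) and adds an `agentRank` block carrying the DID, network, and credential references. That block, its field names, and the example URLs and DID are hypothetical; A2A does not define them.

```python
import json
from typing import Optional

# Subset of standard A2A Agent Card fields; values are illustrative.
agent_card = {
    "name": "Translator Agent",
    "description": "Translates documents between English and French.",
    "url": "https://translator.example.com/a2a",
    "version": "1.0.0",
    "capabilities": {"streaming": False},
    "defaultInputModes": ["text"],
    "defaultOutputModes": ["text"],
    "skills": [{
        "id": "translate",
        "name": "Translation",
        "description": "English-French document translation",
        "tags": ["translation"],
    }],
    # Hypothetical AgentRank extension: NOT defined by the A2A specification.
    "agentRank": {
        "did": "did:example:translator-123",
        "network": "base-sepolia",
        "credentials": ["https://issuer.example.org/credentials/translation-42"],
    },
}

REQUIRED_TRUST_FIELDS = ("did", "network")


def parse_trust_block(card_json: str) -> Optional[dict]:
    """Return the hypothetical agentRank block if present and complete, else None."""
    parsed = json.loads(card_json)
    trust = parsed.get("agentRank")
    if not isinstance(trust, dict):
        return None
    if any(not trust.get(name) for name in REQUIRED_TRUST_FIELDS):
        return None
    return trust


published = json.dumps(agent_card, indent=2)  # what the agent would serve for discovery
```

A client that finds no usable trust block can still talk to the agent over plain A2A; it simply has nothing to look up in the knowledge graph.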

Handshake and Communication

During the handshake, each agent proves it controls its DID and presents its credentials in its introductory messages.

In other words: It’s like a secure introduction where both sides verify each other.
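A common way to prove control of a DID is challenge-response: the verifier sends a fresh random nonce, the prover signs it with the DID's private key, and the verifier checks the signature against the public key the DID resolves to. The sketch below uses a textbook Schnorr signature over a deliberately tiny group so it runs with only the standard library; real DID methods use standard curves such as Ed25519 or secp256k1. `DID_REGISTRY` is a hypothetical stand-in for on-chain DID resolution.

```python
import hashlib
import secrets
from typing import Dict, Tuple

# Toy Schnorr group: P = 2*Q + 1 is a safe prime and G = 4 generates the subgroup of order Q.
# These sizes are for illustration only and are NOT secure (forgery odds are about 1/Q).
P, Q, G = 2039, 1019, 4


def _challenge(r: int, message: bytes) -> int:
    """Fiat-Shamir challenge e = H(r || message) mod Q."""
    r_bytes = r.to_bytes((P.bit_length() + 7) // 8, "big")
    return int.from_bytes(hashlib.sha256(r_bytes + message).digest(), "big") % Q


def keygen() -> Tuple[int, int]:
    x = secrets.randbelow(Q - 1) + 1  # private key in [1, Q-1]
    return x, pow(G, x, P)            # (private key, public key)


def sign(x: int, message: bytes) -> Tuple[int, int]:
    k = secrets.randbelow(Q - 1) + 1  # fresh secret nonce for every signature
    r = pow(G, k, P)
    e = _challenge(r, message)
    return e, (k + x * e) % Q


def verify(y: int, message: bytes, sig: Tuple[int, int]) -> bool:
    e, s = sig
    if not (0 <= e < Q and 0 <= s < Q):
        return False
    # For a valid signature g^s * y^(-e) = g^k; y has order Q, so y^(-e) = y^(Q - e).
    r = (pow(G, s, P) * pow(y, Q - e, P)) % P
    return _challenge(r, message) == e


# Hypothetical stand-in for resolving a DID to its public key on-chain.
DID_REGISTRY: Dict[str, int] = {}


def verify_did_ownership(did: str, nonce: bytes, sig: Tuple[int, int]) -> bool:
    public_key = DID_REGISTRY.get(did)
    return public_key is not None and verify(public_key, nonce, sig)
```

In a handshake, Agent A would generate `secrets.token_bytes(16)` as the nonce, Agent B would reply with `sign(private_key, nonce)`, and Agent A would proceed only if `verify_did_ownership` returns True for B's DID. A fresh nonce per handshake keeps an old response from being replayed.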

Runtime Trust Queries

Agents periodically query the knowledge graph for their collaborators' latest AgentRank scores, or subscribe to real-time updates, and can pause a collaboration if a peer's score drops too low.

In other words: It’s like checking someone’s ratings on Airbnb before booking with them—and getting alerts if things change.
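The pull and push modes in this paragraph can be sketched as a small cache. `fetch_score` is a hypothetical function that queries the knowledge graph; `threshold` and `ttl` are illustrative parameters, and `on_update` is where a real-time subscription would deliver new scores.

```python
import time
from typing import Callable, Dict, Tuple


class TrustMonitor:
    """Caches collaborators' scores and decides whether to keep working with them."""

    def __init__(self, fetch_score: Callable[[str], float], threshold: float,
                 ttl: float = 60.0, clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_score  # hypothetical knowledge-graph query
        self.threshold = threshold
        self.ttl = ttl             # seconds before a cached score is re-queried
        self._clock = clock
        self._cache: Dict[str, Tuple[float, float]] = {}  # did -> (score, fetched_at)

    def on_update(self, did: str, score: float) -> None:
        """Push path: call this from a real-time subscription when a score changes."""
        self._cache[did] = (score, self._clock())

    def score(self, did: str) -> float:
        """Pull path: return the cached score, re-querying once it is older than ttl."""
        now = self._clock()
        cached = self._cache.get(did)
        if cached is None or now - cached[1] >= self.ttl:
            cached = (self._fetch(did), now)
            self._cache[did] = cached
        return cached[0]

    def should_continue(self, did: str) -> bool:
        """Pause the collaboration when the peer's score falls below the threshold."""
        return self.score(did) >= self.threshold
```

The `ttl` trades freshness against query load: a shorter value catches drops sooner, while a subscription makes the drop visible immediately.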

Trust Metadata in Messages

Messages can carry short-lived “reputation badges” that attest to the sender’s score at a given time. Badges expire so they cannot be reused long after the score has changed.

In other words: It’s like a digital sticker saying, “I’m trusted as of yesterday,” that can’t be reused forever.
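A badge can be modeled as a signed payload carrying the agent's DID, its score, and an expiry time. For brevity this sketch authenticates badges with an HMAC key shared between a hypothetical score-issuing service and verifiers; a real deployment would more likely use a public-key signature (for example, a Verifiable Credential) so that anyone can verify without a shared secret. `issue_badge` and `verify_badge` are illustrative names.

```python
import hashlib
import hmac
import json
from typing import Optional


def _tag(payload: dict, key: bytes) -> str:
    """Canonical JSON encoding, then HMAC-SHA256 over it."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def issue_badge(did: str, score: float, ttl: float, now: float, key: bytes) -> dict:
    """Issuer side: attest that `did` had `score` at time `now`, valid for `ttl` seconds."""
    payload = {"did": did, "score": score, "issued_at": now, "expires_at": now + ttl}
    return {"payload": payload, "tag": _tag(payload, key)}


def verify_badge(badge: dict, expected_did: str, now: float, key: bytes) -> Optional[float]:
    """Receiver side: return the attested score, or None if forged, expired, or for another agent."""
    payload = badge["payload"]
    if not hmac.compare_digest(_tag(payload, key), badge["tag"]):
        return None  # payload was altered or signed with a different key
    if payload["did"] != expected_did:
        return None  # badge belongs to someone else
    if not payload["issued_at"] <= now < payload["expires_at"]:
        return None  # outside the validity window
    return payload["score"]
```

Binding the badge to the sender's DID stops one agent from presenting another's badge, and the expiry window bounds how stale a presented score can be.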

This integration ensures A2A agents operate in a secure, composable ecosystem, prioritizing reputable peers without centralized intermediaries. A2A provides the communication “pipes,” while AgentRank offers the “yellow pages” and “credit score” for safe navigation.

Evaluating AgentRank: Ensuring Robustness

A comprehensive evaluation plan tests AgentRank’s performance, security, and utility:

Simulation of Agent Networks

A synthetic ecosystem simulates hundreds of agents with varied behaviors:

- Honest Agents: Perform tasks correctly and endorse truthfully.

- Malicious Agents:

  - Sybil Nodes: Multiple fake identities endorsing each other.

  - Colluding Groups: Agents falsely upvoting each other.

  - Betrayal Agents: Initially trustworthy, then malicious.

- Mixed Behavior: Agents with occasional failures to test adaptability.

At each time step, agents interact, updating the knowledge graph, and AgentRank computes scores. Metrics include:

- Ranking Quality: ROC curves measure how well honest agents rank high and malicious ones low, aiming for an AUC close to 1.0.

- Sybil Containment: Total Sybil reputation is bounded by $(1 - \alpha)$ regardless of how many Sybils there are, because they receive little trust from outside their cluster.

- Collusion Resilience: Measures reputation inflation from collusion, expecting minimal impact without legitimate endorsements.
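The ranking-quality metric can be computed without plotting a full ROC curve: the area under the ROC curve equals the probability that a randomly chosen honest agent outscores a randomly chosen malicious one, with ties counted as one half (the Mann-Whitney formulation). The sketch below uses that identity, plus a hypothetical `sybil_share` helper for the containment metric; both take plain score lists or dicts produced by a simulation.

```python
def roc_auc(honest_scores, malicious_scores):
    """AUC as P(random honest score > random malicious score), counting ties as 1/2."""
    if not honest_scores or not malicious_scores:
        raise ValueError("need at least one honest and one malicious score")
    wins = 0.0
    for h in honest_scores:
        for m in malicious_scores:
            if h > m:
                wins += 1.0
            elif h == m:
                wins += 0.5
    return wins / (len(honest_scores) * len(malicious_scores))


def sybil_share(scores, sybils):
    """Fraction of total reputation held by the given Sybil identities."""
    total = sum(scores.values())
    return sum(scores[v] for v in sybils if v in scores) / total if total else 0.0
```

The pairwise loop is quadratic, which is fine for a few hundred simulated agents; larger runs would sort the scores once and count ranks instead.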

Parameters like $\alpha$ and the decay rate $\lambda$ are varied to assess stability and fairness.

Testnet Deployment

A prototype on a testnet (e.g., Base Sepolia) evaluates:

- Scalability: Gas costs for writing claims and updating reputations.

- Latency: Speed of reputation updates and dispute resolution.

- User Experience: Participants test staking and disputes, using test tokens to validate or refute claims.

Security Analysis

Theoretical proofs confirm Sybil resistance, showing that Sybils capture at most $(1 - \alpha)$ of total trust. Collusion analysis bounds the damage a trusted agent can cause by endorsing malicious peers. Smart contracts for staking and disputes are audited for correctness.

Use-Case Demonstrations

- Collaborative Task Solving: Teams assembled using AgentRank outperform randomly selected teams on complex tasks.

- Agent Marketplace: Reputation-based selection minimizes interactions with malicious providers.

- Trust Recovery: Agents recover from failures through consistent good performance, demonstrating adaptability.

These tests ensure AgentRank is robust against attacks and practical for real-world multi-agent systems.

Conclusion

AgentRank, built on Intuition's decentralized knowledge graph, adds a critical trust layer to A2A. It prevents central control and ensures agent reputations are based on public, transparent data. With strong resistance to fake identities and manipulation, AgentRank supports a more open and reliable AI ecosystem.

Sources

- Google's Agent2Agent Protocol (A2A): https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/

- Navigating a Data-Driven World: The Evolution of Intuition: https://medium.com/0xintuition/navigating-a-data-driven-world-the-evolution-of-intuition-65ad5f92bddc

- The EigenTrust Algorithm for Reputation Management in P2P Networks: https://nlp.stanford.edu/pubs/eigentrust.pdf

- Intuition

