Navigating IONET: The Role of Devices in GPU Optimization

Introduction

In an era where artificial intelligence (AI) and machine learning (ML) are reshaping industries, the demand for computational power, particularly Graphics Processing Units (GPUs), has skyrocketed. However, the global supply of GPUs struggles to keep pace, creating bottlenecks for researchers, startups, and enterprises. Enter IONET, a decentralized physical infrastructure network (DePIN) that is revolutionizing access to GPU resources. By aggregating underutilized GPUs from diverse sources, IONET offers a cost-effective, scalable, and accessible solution to the global compute shortage.

This article explores IONET’s approach: its core functionality, how it addresses computational resource shortages, and the role devices play in optimizing GPU performance on the platform.

What is IONET?

IONET is a decentralized GPU network designed to democratize access to high-performance computing for AI and ML applications. Built on blockchain technology, primarily leveraging the Solana blockchain for its high-speed and low-cost transactions, IONET aggregates underutilized GPU resources from independent data centers, cryptocurrency miners, and individual consumer devices into a global, permissionless marketplace. This decentralized approach contrasts with traditional cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud, which often face supply constraints, long wait times, and high costs.

At its core, IONET operates as a DePIN, a decentralized physical infrastructure network that uses token incentives to coordinate and monetize hardware resources. By creating a marketplace where GPU suppliers can rent out idle hardware and users can access affordable compute power, IONET addresses the inefficiencies of centralized cloud computing. The platform’s native token, $IO, facilitates transactions, incentivizes participation, and supports network security through staking mechanisms. IONET’s mission is to make compute power a ubiquitous resource, akin to a digital currency, powering innovation across industries like AI, finance, and more.

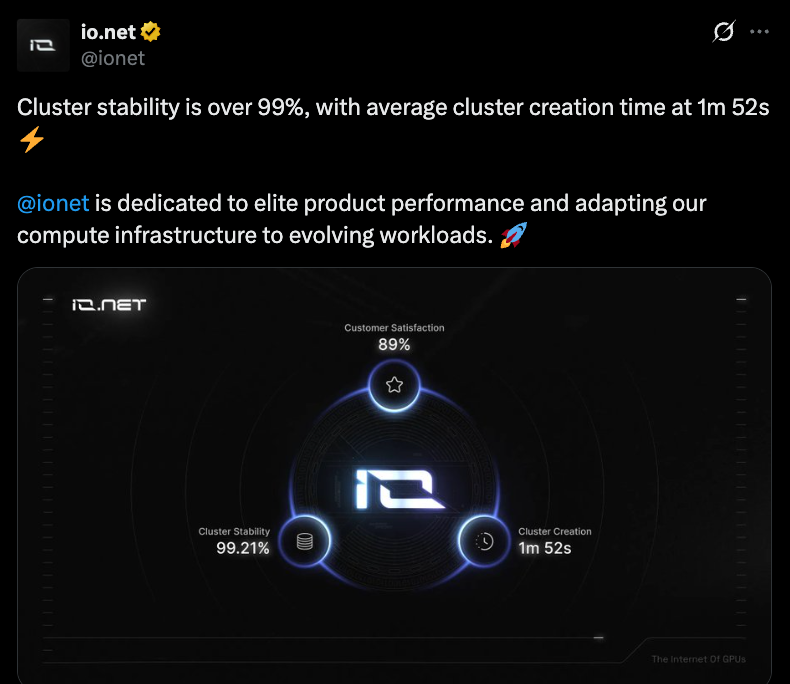

IONET’s platform is built on open-source frameworks like Ray, which is used by industry leaders such as OpenAI for training advanced AI models like GPT-3 and GPT-4. This integration allows IONET to offer seamless orchestration, scheduling, and fault tolerance for distributed computing tasks, making it a powerful tool for engineers and developers. By clustering GPUs from geographically diverse sources, IONET ensures scalability, resilience, and cost-efficiency, enabling users to deploy massive GPU clusters in under 90 seconds.

Addressing Compute Resource Shortages

The global demand for GPUs has surged with the rapid growth of AI and ML workloads, and some projections estimate that demand for cloud GPU capacity outstrips current global supply by a factor of two to three. This scarcity is exacerbated by production constraints on advanced chips like NVIDIA’s A100 and H100, limited manufacturing capacity, and the high costs of centralized cloud providers. For startups and smaller enterprises, these barriers (long wait times, inflexible contracts, and prohibitive costs) hinder innovation and scalability.

IONET tackles these challenges by tapping into underutilized GPU resources. Independent data centers, which often operate at only 12-18% utilization; cryptocurrency mining farms looking for new revenue streams as they move away from proof-of-work mining; and consumer-grade GPUs sitting idle in personal devices all represent untapped potential. IONET aggregates these resources into a decentralized supercloud, offering up to 90% cost savings compared to traditional providers like AWS; the platform is reportedly about 70% cheaper than AWS in practice. It can also launch clusters in just two minutes, a significant improvement over the weeks or months sometimes required by centralized providers.

This decentralized model not only addresses supply shortages but also enhances accessibility. By connecting GPU suppliers directly with users, IONET eliminates traditional gatekeepers, reducing costs and enabling rapid scaling. The platform’s partnerships with projects like Filecoin, Render Network, and Aethir further expand its resource pool, with over 600,000 GPUs across 139 countries as of mid-2024. These collaborations allow IONET to integrate diverse hardware, from consumer-grade NVIDIA RTX 4090s to enterprise-grade H100s, creating a flexible and robust compute ecosystem.

Moreover, IONET’s approach promotes sustainability by repurposing idle hardware, reducing the need for new GPU production and minimizing environmental impact. By enabling dynamic scaling, where users pay only for the resources they use, IONET ensures cost-efficiency and flexibility, making high-performance computing accessible to a broader range of users, from individual researchers to large enterprises.

IONET Products

IONET offers a suite of products designed to streamline access to and management of decentralized GPU resources, tailored to the needs of both users and suppliers. These products are engineered for efficiency, scalability, and integration with modern AI/ML workflows. Below are the key offerings:

IO Cloud

IO Cloud is IONET’s flagship product, providing an intuitive interface for deploying decentralized GPU clusters. Users can specify hardware types (e.g., NVIDIA A100, RTX 4090, or Apple M2 Ultra), geographic locations, and security compliance requirements. The platform supports integration with popular AI frameworks like TensorFlow, PyTorch, and Ray, enabling seamless execution of tasks such as distributed training, hyperparameter tuning, reinforcement learning, and model inference. IO Cloud’s ability to spin up clusters in under 90 seconds makes it ideal for compute-intensive workloads, offering up to 90% cost savings compared to traditional cloud providers.
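The cluster options described above (hardware type, geographic location, compliance requirements) can be thought of as filters over a pool of available devices. The sketch below illustrates that idea under stated assumptions; it is not IO Cloud's actual API. The `Device` and `ClusterRequest` types, their field names, the region strings, and the compliance labels are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Device:
    # Hypothetical record for one GPU offered to the network.
    device_id: str
    gpu_model: str
    region: str
    compliance: frozenset = frozenset()


@dataclass
class ClusterRequest:
    # Hypothetical cluster spec: hardware type, allowed regions,
    # required compliance labels, and number of GPUs.
    gpu_model: str
    regions: set
    required_compliance: set = field(default_factory=set)
    count: int = 1


def select_devices(pool, request):
    """Return the first `request.count` matching devices, or raise if too few match."""
    matches = [
        d for d in pool
        if d.gpu_model == request.gpu_model
        and d.region in request.regions
        and request.required_compliance <= d.compliance  # subset check
    ]
    if len(matches) < request.count:
        raise ValueError(f"only {len(matches)} of {request.count} requested GPUs available")
    return matches[: request.count]


pool = [
    Device("d1", "A100", "us-east", frozenset({"SOC2"})),
    Device("d2", "A100", "eu-west"),
    Device("d3", "RTX 4090", "us-east", frozenset({"SOC2"})),
    Device("d4", "A100", "us-east", frozenset({"SOC2", "HIPAA"})),
]
req = ClusterRequest("A100", {"us-east"}, {"SOC2"}, count=2)
print([d.device_id for d in select_devices(pool, req)])  # ['d1', 'd4']
```

Raising an error when too few devices match, rather than silently returning a smaller cluster, lets the caller decide whether to relax the region or compliance constraints.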

IO Worker

IO Worker is designed for GPU suppliers, allowing them to connect their devices to the IONET network and monetize idle resources. Suppliers can monitor real-time performance, manage devices, and optimize resource usage through a transparent dashboard. The platform supports a variety of GPUs, from consumer-grade to enterprise-grade, and even CPUs like Apple’s M1-M3 and AMD’s Ryzen series. Payments to suppliers are made in $IO tokens or USDC, with no transaction fees for $IO payments, incentivizing participation and maximizing returns.

BC8.AI

BC8.AI is a specialized product for AI-generated content and model inference, built on IONET’s decentralized infrastructure. It leverages the network’s GPU clusters to power applications like image diffusion models (e.g., Stable Diffusion) and other AI-driven tasks. By running inferences closer to end-users, BC8.AI reduces latency and enhances performance, making it a valuable tool for developers in creative industries.
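One simple way to picture "running inferences closer to end-users" is routing each request to the geographically nearest node. The sketch below uses great-circle (haversine) distance as a rough proxy for latency; that is a simplifying assumption, since a production router would more likely measure round-trip times directly. The node names and coordinates are hypothetical and do not describe BC8.AI's actual routing.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest_node(user, nodes):
    """Pick the node closest to the user's (lat, lon) as a latency proxy."""
    return min(nodes, key=lambda n: haversine_km(user[0], user[1], n["lat"], n["lon"]))


# Hypothetical inference nodes.
nodes = [
    {"name": "frankfurt", "lat": 50.11, "lon": 8.68},
    {"name": "singapore", "lat": 1.35, "lon": 103.82},
    {"name": "virginia", "lat": 38.95, "lon": -77.45},
]
print(nearest_node((48.86, 2.35), nodes)["name"])    # user in Paris -> frankfurt
print(nearest_node((35.68, 139.69), nodes)["name"])  # user in Tokyo -> singapore
```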

These products collectively form a robust ecosystem that supports diverse use cases, from scientific simulations to cloud gaming, while addressing the core challenges of cost, scalability, and accessibility in GPU computing.

The Role of Devices in GPU Optimization on IONET

Devices are the backbone of IONET’s decentralized network, enabling intelligent workload distribution and scalable compute power. By leveraging a diverse range of hardware, from consumer PCs to enterprise-grade data center GPUs, IONET optimizes GPU performance through advanced orchestration and virtualization technologies. Below, we explore how devices contribute to GPU optimization on the platform.

Hardware Profiling and Resource Allocation

IONET employs a lightweight agent that runs on each connected device, logging specifications such as GPU model, memory capacity, and availability. For example, a consumer-grade NVIDIA RTX 3070 with 8GB VRAM might be assigned to lightweight tasks like inference, while a high-end A100 with 80GB VRAM is reserved for heavy-duty training workloads. This profiling ensures that tasks are matched to the most suitable hardware, maximizing efficiency and minimizing resource waste.
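A minimal sketch of the profile-then-match step, under stated assumptions. The `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits` query is a real way to read GPU model and total memory on NVIDIA hardware, but the `GpuProfile` type, the `pick_device` helper, the best-fit policy, and the 6 GB and 40 GB requirements in the example are hypothetical; this is not IONET's agent.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class GpuProfile:
    name: str
    vram_gb: float
    available: bool = True


def profile_local_gpus():
    """Read GPU model and total memory via nvidia-smi; return [] if it is unavailable."""
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True, timeout=10,
        ).stdout
    except (OSError, subprocess.SubprocessError):
        return []  # no NVIDIA driver/tool on this machine, or the query failed
    profiles = []
    for line in out.strip().splitlines():
        parts = [part.strip() for part in line.split(",")]
        try:
            # memory.total is reported in MiB when "nounits" is set
            profiles.append(GpuProfile(parts[0], float(parts[1]) / 1024))
        except (IndexError, ValueError):
            continue  # skip rows in an unexpected format, e.g. "[N/A]"
    return profiles


def pick_device(profiles, min_vram_gb):
    """Best fit: the smallest available GPU that meets the VRAM requirement,
    so large cards stay free for heavy training jobs."""
    fits = [p for p in profiles if p.available and p.vram_gb >= min_vram_gb]
    return min(fits, key=lambda p: p.vram_gb, default=None)


# Hypothetical fleet mirroring the example in the text.
fleet = [GpuProfile("RTX 3070", 8), GpuProfile("A100", 80)]
print(pick_device(fleet, min_vram_gb=6).name)   # RTX 3070: inference-sized job
print(pick_device(fleet, min_vram_gb=40).name)  # A100: training-sized job
```

Best fit is one reasonable policy among several; its benefit is that a small inference job never occupies an 80 GB card that a training run might need.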

The platform’s orchestration layer, built on frameworks like Ray and Kubernetes, intelligently distributes workloads across devices based on real-time demand and hardware capabilities. This dynamic allocation allows IONET to handle complex tasks like parallel hyperparameter tuning and reinforcement learning, where multiple GPUs work in synergy to process large datasets or models. By optimizing resource allocation, IONET reduces latency and ensures high performance, even across geographically distributed devices.
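To make capability-aware distribution concrete, the sketch below spreads hyperparameter-tuning trials over GPUs of different speeds with a greedy earliest-finish-time rule: trials are placed largest first, each on the device that would complete it soonest. This is a textbook heuristic chosen for illustration, not the scheduling policy Ray or Kubernetes actually uses, and the device speeds and trial costs are invented.

```python
def assign_trials(trial_costs, device_speed):
    """Greedy earliest-finish-time assignment.

    trial_costs:  {trial_id: work units}
    device_speed: {device_id: work units per hour}
    Returns ({device_id: [trial_ids]}, makespan in hours).
    """
    finish = {d: 0.0 for d in device_speed}
    plan = {d: [] for d in device_speed}
    # Place the largest trials first (longest-processing-time ordering).
    for trial, cost in sorted(trial_costs.items(), key=lambda kv: -kv[1]):
        best = min(device_speed, key=lambda d: finish[d] + cost / device_speed[d])
        finish[best] += cost / device_speed[best]
        plan[best].append(trial)
    return plan, max(finish.values())


speeds = {"a100": 4.0, "rtx4090": 2.0, "rtx3070": 1.0}  # hypothetical relative throughput
trials = {"t1": 8, "t2": 4, "t3": 4, "t4": 2, "t5": 2}   # hypothetical trial sizes
plan, makespan = assign_trials(trials, speeds)
print(plan)      # {'a100': ['t1', 't3'], 'rtx4090': ['t2', 't5'], 'rtx3070': ['t4']}
print(makespan)  # 3.0
```

Because the rule accounts for each device's speed, even the slowest card receives useful work instead of idling.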

Decentralized Clustering

Unlike traditional cloud providers that rely on centralized data centers, IONET’s decentralized architecture clusters GPUs from diverse sources into virtual compute pools. This clustering capability, pioneered by IONET’s founder Ahmad Shadid, allows the platform to create scalable, resilient clusters in minutes. For instance, during a live demo at the Solana Breakpoint 2023 conference, IONET showcased its ability to deploy a cluster of GPUs from different global locations, highlighting its speed and flexibility.

Devices play a critical role in this process by contributing their compute power to the network. A motion graphics designer’s RTX 4090, a crypto miner’s idle A100, or a data center’s underutilized H100 can all be seamlessly integrated into a single cluster. This distributed approach eliminates single points of failure, enhances resilience, and allows IONET to scale resources up or down based on demand.
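The resilience argument can be sketched as a virtual cluster that draws members from a pool of independent contributors: when one device drops out, a spare is pulled in, and if no spare exists the cluster keeps running at reduced capacity. The `VirtualCluster` class, its methods, and the device names below are hypothetical and only illustrate the idea.

```python
class VirtualCluster:
    """Hypothetical virtual pool of GPUs drawn from many independent owners."""

    def __init__(self, spare_pool):
        self.members = []              # devices currently serving the cluster
        self.spare = list(spare_pool)  # idle devices that can be pulled in

    def scale_to(self, target):
        """Grow or shrink to `target` members, limited by available spares."""
        while len(self.members) < target and self.spare:
            self.members.append(self.spare.pop(0))
        while len(self.members) > target:
            self.spare.append(self.members.pop())
        return len(self.members)

    def handle_failure(self, device):
        """Drop a failed device (it does not return to the spares) and backfill."""
        if device not in self.members:
            return len(self.members)
        self.members.remove(device)
        if self.spare:
            self.members.append(self.spare.pop(0))
        return len(self.members)


cluster = VirtualCluster(["designer-4090", "miner-a100", "dc-h100", "dc-h100-b"])
cluster.scale_to(3)                   # designer-4090, miner-a100, dc-h100
cluster.handle_failure("miner-a100")  # backfilled with dc-h100-b, still 3 GPUs
cluster.handle_failure("dc-h100")     # no spares left: degraded to 2 GPUs, not down
print(cluster.members)                # ['designer-4090', 'dc-h100-b']
```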

Monetization and Incentive Mechanisms

IONET incentivizes device owners to contribute their GPUs through token-based rewards. Suppliers earn $IO tokens for providing compute power, with hourly rewards following a disinflationary model starting at 8% annually. The platform’s transparent dashboard provides real-time metrics on GPU usage, job runtimes, costs, and payments, empowering suppliers to optimize their contributions. Additionally, staking $IO tokens increases a node’s priority and rewards, further encouraging participation.

This incentive structure ensures a steady supply of devices, which is crucial for maintaining network scalability and performance. By monetizing idle hardware, IONET not only addresses GPU shortages but also creates new revenue streams for suppliers, from individual gamers to large data centers.
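The reward mechanics can be illustrated with a small worked model. Only the 8% starting rate and the hourly, disinflationary payout come from the description above; the yearly decay factor of 0.85, the even spread over 8,760 hours, the weighting by GPU-hours, and the 25% staking boost are hypothetical placeholders, not IONET's published tokenomics.

```python
HOURS_PER_YEAR = 24 * 365  # 8760


def annual_rate(year, start_rate=0.08, decay=0.85):
    """Disinflationary emission rate: 8% in year 0, then multiplied by a
    HYPOTHETICAL decay factor each year."""
    return start_rate * decay ** year


def hourly_emission(base_supply, year):
    """Tokens released per hour, assuming the annual rate is spread evenly."""
    return base_supply * annual_rate(year) / HOURS_PER_YEAR


def split_hour(emission, contributions, stake_boost=0.25):
    """Share one hour's emission in proportion to GPU-hours supplied,
    with a HYPOTHETICAL weight boost for nodes that stake $IO."""
    weights = {
        node: gpu_hours * ((1 + stake_boost) if staked else 1)
        for node, (gpu_hours, staked) in contributions.items()
    }
    total = sum(weights.values())
    return {node: emission * w / total for node, w in weights.items()}


shares = split_hour(9.5, {"gamer": (1.0, False), "datacenter": (3.0, True)})
print(shares)  # {'gamer': 2.0, 'datacenter': 7.5}
```

Under these assumptions the staked data center earns a larger share per GPU-hour than the unstaked gamer, which is the incentive effect the text describes.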

Security and Reliability

Security is a priority for IONET, especially given the distributed nature of its network. Devices are protected by multiple permission layers, ensuring that malicious users cannot access underlying hardware. A 2024 incident illustrated these protections: attackers were able to alter device metadata but could not compromise the actual GPU resources. The platform’s Proof of Work mechanism and continuous security patches further safeguard devices, ensuring reliable and secure operation.
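Separating "who may change a device's metadata" from "who may use its hardware" is one of the permission layers such a network needs. A minimal sketch of one way to authenticate metadata updates uses HMAC signatures from Python's standard library: an update is applied only if it was signed with that device's secret. The per-device secrets, the message layout, and `apply_metadata_update` are hypothetical; this does not describe IONET's actual implementation or its response to the 2024 incident.

```python
import hashlib
import hmac
import json


def sign_update(secret: bytes, device_id: str, metadata: dict) -> str:
    """Owner side: sign the canonical JSON of the update with a per-device secret."""
    payload = json.dumps({"device_id": device_id, "metadata": metadata},
                         sort_keys=True).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def apply_metadata_update(registry, secrets, device_id, metadata, signature):
    """Server side: accept the update only if the signature matches the device's secret."""
    secret = secrets.get(device_id)
    if secret is None:
        return False
    expected = sign_update(secret, device_id, metadata)
    if not hmac.compare_digest(expected, signature):  # constant-time comparison
        return False  # forged or tampered request: metadata stays unchanged
    registry[device_id].update(metadata)
    return True


secrets = {"gpu-1": b"owner-secret"}   # hypothetical per-device secret
registry = {"gpu-1": {"name": "rig-1"}}
good = sign_update(b"owner-secret", "gpu-1", {"name": "rig-1-renamed"})
forged = sign_update(b"attacker-guess", "gpu-1", {"name": "pwned"})
print(apply_metadata_update(registry, secrets, "gpu-1", {"name": "rig-1-renamed"}, good))  # True
print(apply_metadata_update(registry, secrets, "gpu-1", {"name": "pwned"}, forged))        # False
print(registry["gpu-1"]["name"])  # rig-1-renamed
```

This sketch does not stop replay of a previously valid request; a real system would also bind each signature to a nonce or timestamp.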

Real-World Impact

The role of devices in GPU optimization extends beyond technical efficiency to real-world impact. For example, Krea.ai leverages IONET’s IO Cloud to power AI model inferences, while partnerships with projects like NavyAI, Synesis One, and LeonardoAI demonstrate the platform’s versatility. By enabling devices to contribute to a global compute network, IONET empowers developers and businesses to tackle compute-intensive tasks at a fraction of the cost, driving innovation across industries.

Challenges and Future Outlook

While IONET’s decentralized model offers significant advantages, it faces challenges in scalability, regulatory compliance, and interoperability. Ensuring consistent performance across a heterogeneous network of devices requires ongoing optimization of orchestration and virtualization technologies. Navigating global regulatory frameworks for decentralized computing and cryptocurrency transactions is another hurdle, as is establishing standards for seamless integration with existing AI frameworks.

Looking ahead, IONET aims to onboard a million GPUs, further expanding its network and solidifying its position as the world’s largest decentralized AI compute cloud. Partnerships with industry leaders and continued investment in open-source frameworks like Ray will drive adoption. The platform’s focus on sustainability, affordability, and accessibility positions it to disrupt the centralized cloud computing market, making GPU resources a universal asset for innovation.

Conclusion

IONET is redefining the landscape of high-performance computing by leveraging decentralized GPU resources to address global shortages and democratize access to compute power. Through its products (IO Cloud, IO Worker, and BC8.AI), IONET provides a scalable, cost-effective, and resilient alternative to traditional cloud providers. Devices, from consumer PCs to enterprise-grade GPUs, are the cornerstone of this ecosystem, enabling intelligent workload distribution, decentralized clustering, and secure, incentivized participation. As IONET continues to grow, it stands poised to transform AI and ML development, making computational power a ubiquitous resource for the next generation of technological breakthroughs.

