Projects within the Mitosis ecosystem - Part 1

I’ve decided to kick off a review series on the core projects and tools in the Mitosis ecosystem.

While I’m learning myself, I want to gather and organize information as I go - and share it with you, so we can all get a clearer picture together of how cross-chain liquidity works and how the DeFi and crypto landscapes fit together.

My main goal is to explain things in a simple, accessible way. I’m still on the learning curve, so I might slip up sometimes - please do correct me in the comments, and share any questions or thoughts you have.

I’d genuinely love any feedback or ideas to make these reviews even more helpful and engaging. Let’s learn together!

Stork Oracle (Infrastructure) - basics and role in the Mitosis ecosystem

Often, ecosystem tools don’t get their due credit, even though they’re strategically crucial. One such tool in the Mitosis ecosystem is the Stork Oracle.

In a nutshell: oracles are bridges between smart contracts and the outside world - they bring in real, verified information like asset prices or event outcomes. Without them, blockchains would be like sealed-off vaults, unable to see what’s happening beyond their own walls.

For example, Mitosis by itself can’t “know” whether a user did something on another network (say, deposited tokens into an Ethereum contract) or what ETH is trading at right now. To get accurate real-world data on prices, actions, or events, you need an external trusted source - and in Mitosis, that source is Stork. It pulls in data from multiple exchanges and sources, then feeds it into the network with tight accuracy and minimal delay.

Most oracles fall into one of two models:

- Push model: They send data to the blockchain on a set schedule (like every minute).

- Pull model (that’s Stork): Data only moves when needed. It’s faster (less delay), cheaper (lower gas), and more flexible (not tied to a provider’s schedule).

Many oracles operate with the push approach, meaning they “push” data regularly - every minute, every 10 minutes, or even more often. Chainlink, for instance, writes updates on-chain ahead of time, whether or not anyone needs them yet, which can mean the stored value lags the market by 2-5 minutes.

Drawbacks:

- Data becomes stale fast

- Constant pushes = gas costs pile up

- No flexibility: you’re stuck with provider timing and rules
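To make the push vs. pull trade-off a bit more concrete, here is a toy Python model I put together (an illustration, not code from Stork or Chainlink). It assumes a push oracle writes on a fixed interval whether or not anyone reads the value, while a pull oracle attaches one signed update to each transaction that actually needs it. On-chain writes stand in for gas, and "age at use" stands in for staleness; the function names and the `fetch_latency` parameter are hypothetical.

```python
# Toy comparison of push vs. pull oracle delivery (illustrative only).
from dataclasses import dataclass


@dataclass
class Result:
    onchain_writes: int     # proxy for gas spent on oracle updates
    max_age_at_use: float   # worst-case data age (seconds) seen by a consumer


def push_model(use_times: list[float], horizon: float, interval: float) -> Result:
    """Oracle writes at t = 0, interval, 2*interval, ... up to horizon, read or not."""
    writes = int(horizon // interval) + 1
    # At use time t, the newest on-chain value was written at floor(t / interval) * interval.
    ages = [t - (t // interval) * interval for t in use_times]
    return Result(writes, max(ages, default=0.0))


def pull_model(use_times: list[float], fetch_latency: float) -> Result:
    """One signed update travels inside each transaction that needs the price."""
    return Result(len(use_times), fetch_latency if use_times else 0.0)


uses = [30.0, 95.0, 3590.0]  # three price reads within one hour (seconds)
print(push_model(uses, horizon=3600.0, interval=60.0))  # Result(onchain_writes=61, max_age_at_use=50.0)
print(pull_model(uses, fetch_latency=0.5))              # Result(onchain_writes=3, max_age_at_use=0.5)
```

With only three reads in an hour, the push oracle still pays for 61 writes and a reader can see data almost a minute old, while the pull oracle pays for exactly the updates that are used.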

Stork implements a pull-model oracle: publishers stream signed price updates to the Stork network off-chain every time the market moves (for example, prices from centralized exchanges like Binance or Coinbase), and the data is brought on-chain only when it is needed. This model provides minimal update latency (down to 500 ms) and high accuracy, especially during volatile market conditions.

Stork covers over 370 assets across more than 60 networks, making it a universal solution for multichain protocols. Data updates occur either when the price changes by 0.1% or on a timer every half second, which is critical for high-frequency DeFi strategies and derivatives.
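The update rule above (a new value on a 0.1% move, otherwise every half second) is essentially a deviation threshold plus a heartbeat. Here is a minimal Python sketch of that logic; the two thresholds come from the text, while the function names, the millisecond timestamps, and treating a move of exactly 0.1% as a trigger are my own assumptions.

```python
# Deviation-threshold + heartbeat update rule (thresholds from the article;
# names and millisecond timestamps are illustrative assumptions).
DEVIATION_THRESHOLD = 0.001  # a 0.1% move triggers an update
HEARTBEAT_MS = 500           # otherwise, update every half second


def should_update(last_value: float, last_ts_ms: int,
                  new_value: float, now_ms: int) -> bool:
    """Decide whether a new value should be published."""
    if now_ms - last_ts_ms >= HEARTBEAT_MS:
        return True  # the timer fired
    if last_value == 0:
        return new_value != 0  # avoid dividing by zero
    deviation = abs(new_value - last_value) / abs(last_value)
    return deviation >= DEVIATION_THRESHOLD


def filter_updates(ticks: list[tuple[int, float]]) -> list[tuple[int, float]]:
    """Given (timestamp_ms, value) ticks, keep only those that would be published."""
    published: list[tuple[int, float]] = []
    for ts, value in ticks:
        if not published:
            published.append((ts, value))  # the first observation is always published
            continue
        last_ts, last_value = published[-1]
        if should_update(last_value, last_ts, value, ts):
            published.append((ts, value))
    return published


ticks = [(0, 3000.0), (100, 3001.0), (200, 3004.0), (300, 3004.5), (800, 3004.5)]
print(filter_updates(ticks))  # [(0, 3000.0), (200, 3004.0), (800, 3004.5)]
```

Small wiggles (3000 → 3001) are skipped, a move of about 0.13% is published immediately, and the heartbeat guarantees a refresh even when the price barely changes.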

Technically, the data is signed with ECDSA signatures over secp256k1 - the same cryptographic curve used in Ethereum. Because an EVM contract can check such a signature by itself, the data can be included directly in the same transaction where it is used, without prior on-chain publication or an external call.

In summary:

- Minimal latency - data is always fresh, delivered in real time.

- Gas optimization - no need to regularly update data on-chain "just in case."

- Flexibility - any user or contract can initiate a request without waiting for a schedule.

- Easy integration - data arrives in the same transaction where it is used, with no extra infrastructure layer.

It’s important to understand that oracles are never universal - their architecture, trust model, and update frequency are chosen to fit specific scenarios. There are centralized solutions, decentralized validator networks, and specialized feeds (for options, NFTs, weather data, etc.). Therefore, the question “which oracle is best” always depends on the context of use.

In the case of Mitosis, where low latency, flexible liquidity routing, and modular architecture are critical, Stork is the optimal choice. Thanks to its pull model, high update frequency, and direct contract integration, it provides the reliability and speed needed to build programmable liquidity in real time.

Interaction between Mitosis and Stork

Stork does not manage assets or make decisions, but serves as a reliable intermediary that delivers trusted data between different blockchains - like a postman delivering an important letter.

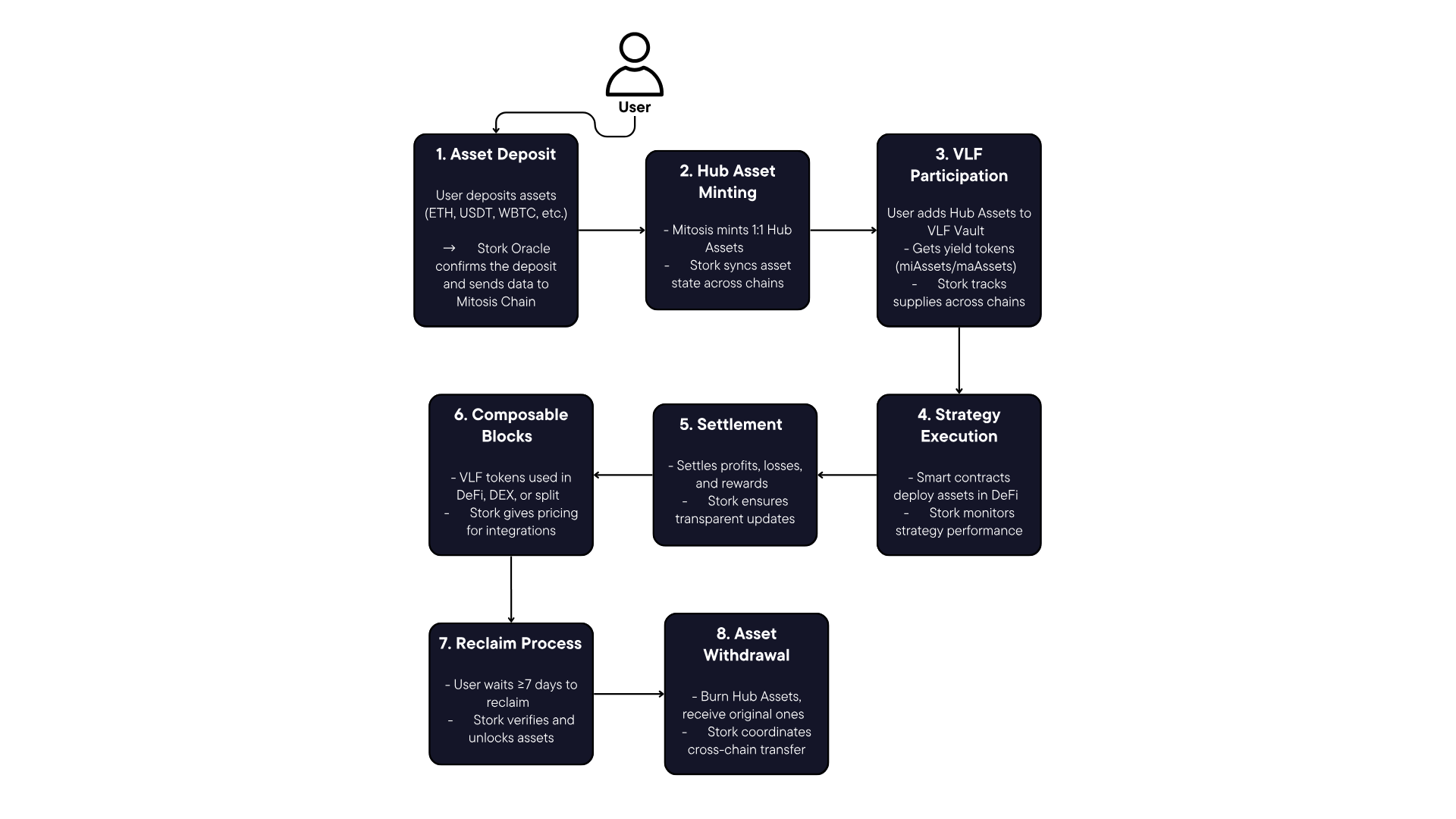

If we take the diagram from my post about programmable liquidity and add Stork’s influence, it looks something like this ↓

Below is a simplified chain of main programmable liquidity actions involving Stork:

1. Asset Deposit

💧The user deposits assets (ETH, USDT, WBTC, etc.) into the Mitosis Vault on supported branch chains (Ethereum, Arbitrum, Base, Morph, etc.)

→ 🔸Stork Oracle confirms the deposit and sends data to Mitosis Chain

↓

2. Hub Asset Minting

💧Asset Manager on Mitosis Chain receives the deposit message and mints Hub Assets at a 1:1 ratio

→ Hub Assets remain safe and are never used for VLF without explicit user consent

→ 🔸Stork Oracle synchronizes asset state across networks

↓

3. VLF Participation

💧The user voluntarily supplies Hub Assets to VLF Vaults (ERC-4626 compatible)

→ Receives VLF tokens (miAssets/maAssets) - yield-bearing tokens

→ 🔸Stork Oracle tracks supplies and sends data to branch chains

↓

4. Strategy Execution

💧Strategist (smart contract) deploys assets in DeFi strategies on branch chains:

→ Lending (Compound, Aave), AMM (Uniswap), Yield Farming

→ Uses Merkle Proof verification for security

→ 🔸Stork Oracle monitors strategy performance in real time

↓

5. Settlement System

💧Settlement happens periodically and comes in three types:

→ Yield Settlement: Minting Hub Assets on profit

→ Loss Settlement: Burning Hub Assets on loss

→ Extra Rewards Settlement: Distributing reward tokens

→ 🔸Stork Oracle ensures accuracy and transparency of settlements

↓

6. Composable Building Blocks

💧VLF tokens function as programmable building blocks:

→ Collateral in other DeFi protocols

→ Liquidity on DEX and trading platforms

→ Decomposition into principal and yield

→ Combining to create complex financial instruments

→ Integration into Mitosis ecosystem for custom strategies

→ 🔸Stork Oracle provides transparent pricing for all integrations

↓

7. Reclaim Process

💧ReclaimQueue with minimum waiting period (≥7 days):

→ Reclaim request → VLF tokens moved to queue

→ Liquidity reserved by Strategist + waiting period

→ Claim execution → Hub Assets returned

→ 🔸Stork Oracle verifies asset availability and processes reclaim

↓

8. Asset Withdrawal

💧The user can redeem Hub Assets for the original assets:

→ Burning Hub Assets on Mitosis Chain

→ Cross-chain message to the Mitosis Vault on the branch chain

→ Asset transfer to user on selected branch chain

→ 🔸Stork Oracle coordinates safe cross-chain transfer

In short, across this whole flow, Stork:

- Collects data from branch chains: deposits, strategies, yield.

- Transfers it to the hub (Mitosis Chain) and vice versa.

- Ensures trust between networks without relying on a centralized bridge.

- Participates in finalizing all key events: deposits, strategies, withdrawals.

The Stork-Mitosis pairing shows how a high-frequency oracle can be embedded into cross-chain liquidity management infrastructure. Mitosis turns DeFi positions into programmable components via VLF strategies, so it needs to track strategy performance and asset state across networks in real time to handle settlement and liquidity allocation safely. Integrating Stork lets Mitosis get objective yield data quickly and use it in yield/loss settlement calculations. This is especially important when syncing VLF strategies between branch chains and Mitosis Chain: any delay or manipulation of the data can lead to incorrect settlements or open the door to fraud against users. Stork acts as a safeguard here: by delivering data with very low latency, it narrows the window for attacks such as frontrunning or oracle manipulation.
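To illustrate how a reported strategy value could drive yield and loss settlement, here is a toy Python model of an ERC-4626-style vault (the standard the VLF Vaults follow, per the flow above). Assumptions: the share price is total assets divided by total shares, a settlement simply mints or burns Hub Assets so the vault's assets match the reported value, and the report arrives as a plain integer. The class and method names are hypothetical, not Mitosis contract APIs; integer division mimics on-chain rounding.

```python
# Toy ERC-4626-style vault with oracle-driven yield/loss settlement.
# Hypothetical names; not Mitosis contract code.
class Vault:
    def __init__(self) -> None:
        self.total_assets = 0  # Hub Assets held by the vault
        self.total_shares = 0  # VLF tokens (e.g. miAssets) outstanding

    def deposit(self, assets: int) -> int:
        """Mint shares for deposited assets and return the number minted."""
        if self.total_shares == 0:
            shares = assets  # first deposit: 1 share per asset
        elif self.total_assets == 0:
            raise ValueError("outstanding shares are not backed by any assets")
        else:
            shares = assets * self.total_shares // self.total_assets
        self.total_assets += assets
        self.total_shares += shares
        return shares

    def preview_redeem(self, shares: int) -> int:
        """How many Hub Assets a given number of shares is currently worth."""
        return shares * self.total_assets // self.total_shares

    def settle(self, reported_value: int) -> int:
        """Apply a settlement from a reported strategy value.

        Returns the change in assets: positive = yield settlement (mint),
        negative = loss settlement (burn).
        """
        if reported_value < 0:
            raise ValueError("reported value cannot be negative")
        delta = reported_value - self.total_assets
        self.total_assets = reported_value
        return delta


vault = Vault()
alice = vault.deposit(1_000)        # 1000 shares
print(vault.settle(1_050))          # 50   (yield settlement)
print(vault.preview_redeem(alice))  # 1050
bob = vault.deposit(1_050)          # 1000 shares at the new price
print(vault.settle(1_890))          # -210 (loss settlement)
print(vault.preview_redeem(alice))  # 945
```

The point of the sketch is that the settlement delta flows straight into every holder's redeemable amount, which is why a late or manipulated report translates directly into a wrong payout.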

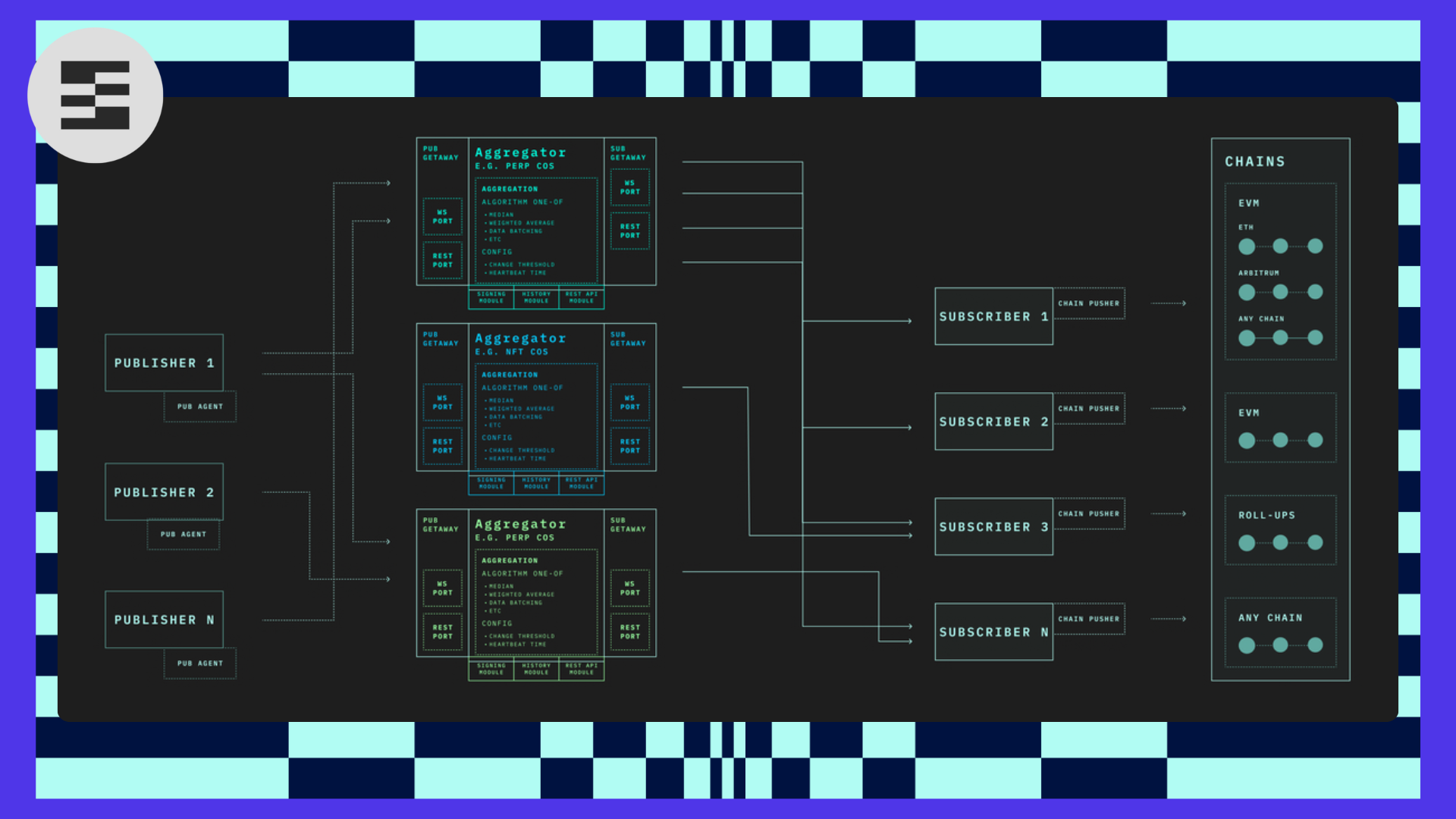

Stork architecture: a summary from the documentation

Stork is built on the principles of modularity and transparency and consists of four key layers:

Publishers → Aggregators → Subscribers → On-Chain Contracts

Each of these layers performs a clear function, and the architecture as a whole ensures reliable delivery of data to the blockchain.

1. Publishers (Data Providers)

This is the external layer - independent data sources.

Examples: centralized exchanges, HFT companies, market data providers, etc.

They publish data in the TNV format (see below), sign each update with their own ECDSA key, and transmit it to the Stork network via WebSocket.

- Why this matters: each publisher cryptographically proves that the data really comes from them, which rules out spoofing and tampering in transit.
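To see what "signing each update" means in practice, here is an educational Python sketch of a publisher signing a TNV-style update with ECDSA over secp256k1 and a consumer verifying it. It is written from scratch with the standard library so it runs anywhere, which also means it is not production cryptography (no constant-time arithmetic, random nonces instead of RFC 6979). The payload encoding, the `tnv_digest` helper, and the use of SHA-256 are assumptions: Ethereum-style signing hashes with Keccak-256, which Python's standard library does not include, and Stork's real message format is defined in its documentation.

```python
# Educational ECDSA over secp256k1 (NOT production crypto).
import hashlib
import secrets
from typing import Optional

Point = Optional[tuple[int, int]]  # None represents the point at infinity

# secp256k1 domain parameters (SEC 2): curve y^2 = x^3 + 7 over F_P
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)


def point_add(a: Point, b: Point) -> Point:
    if a is None:
        return b
    if b is None:
        return a
    if a[0] == b[0] and (a[1] + b[1]) % P == 0:
        return None  # a + (-a) = infinity
    if a == b:
        lam = 3 * a[0] * a[0] * pow(2 * a[1], -1, P) % P  # tangent slope
    else:
        lam = (b[1] - a[1]) * pow((b[0] - a[0]) % P, -1, P) % P  # chord slope
    x = (lam * lam - a[0] - b[0]) % P
    return x, (lam * (a[0] - x) - a[1]) % P


def scalar_mult(k: int, point: Point) -> Point:
    result: Point = None
    while k:  # double-and-add
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def tnv_digest(asset_id: str, timestamp_ns: int, value: int) -> int:
    payload = f"{asset_id}|{timestamp_ns}|{value}".encode()  # hypothetical encoding
    return int.from_bytes(hashlib.sha256(payload).digest(), "big")


def sign(priv: int, z: int) -> tuple[int, int]:
    while True:
        k = secrets.randbelow(N - 1) + 1  # fresh secret nonce per signature
        r = scalar_mult(k, G)[0] % N
        s = pow(k, -1, N) * (z + r * priv) % N
        if r and s:
            return r, s


def verify(pub: Point, z: int, sig: tuple[int, int]) -> bool:
    r, s = sig
    if not (1 <= r < N and 1 <= s < N):
        return False
    w = pow(s, -1, N)
    point = point_add(scalar_mult(z * w % N, G), scalar_mult(r * w % N, pub))
    return point is not None and point[0] % N == r


priv = secrets.randbelow(N - 1) + 1  # publisher's private key
pub = scalar_mult(priv, G)           # publisher's public key
ts = 1_700_000_000_000_000_000       # hypothetical nanosecond timestamp
z = tnv_digest("ETHUSD", ts, 3_000_120_000_000_000_000_000)  # 3000.12 with 18 decimals
sig = sign(priv, z)
print(verify(pub, z, sig))                                                       # True
print(verify(pub, tnv_digest("ETHUSD", ts, 3_100_000_000_000_000_000_000), sig))  # False
```

Changing even the value invalidates the signature, which is exactly the "data really comes from them and was not altered" guarantee described above.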

2. Aggregators

This is the "brain" of the whole system. Aggregators:

- receive TNV updates from different publishers;

- verify the correctness and validity of all signatures;

- aggregate data using specified methods - median, average, weighted average, etc.;

- sign the result, including the aggregation method and the list of source signatures.

- Updates occur every 500 ms or when the value changes by more than 0.1%. This keeps the data as fresh as possible without extra network load.

- The aggregator's final signature is proof that the data was formed honestly, transparently, and reproducibly.
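The aggregation step can be sketched in Python as follows. It assumes the publisher signatures have already been checked and leaves out the aggregator's own signature; the class names, the per-publisher weight, and the method strings are illustrative, not Stork's schema. The example input includes one outlier to show why a median is often preferred over a plain average.

```python
# Sketch of an aggregator combining already-verified publisher values.
from dataclasses import dataclass
from statistics import fmean, median


@dataclass(frozen=True)
class PublisherUpdate:
    publisher: str
    value: float
    weight: float = 1.0  # hypothetical per-publisher weight


@dataclass(frozen=True)
class AggregatedUpdate:
    value: float
    method: str               # recorded so the result is reproducible
    sources: tuple[str, ...]  # which publishers contributed


def aggregate(updates: list[PublisherUpdate], method: str = "median") -> AggregatedUpdate:
    if not updates:
        raise ValueError("no verified publisher updates to aggregate")
    values = [u.value for u in updates]
    if method == "median":
        result = median(values)
    elif method == "mean":
        result = fmean(values)
    elif method == "weighted_mean":
        total_weight = sum(u.weight for u in updates)
        result = sum(u.value * u.weight for u in updates) / total_weight
    else:
        raise ValueError(f"unknown aggregation method: {method}")
    return AggregatedUpdate(result, method, tuple(sorted(u.publisher for u in updates)))


updates = [PublisherUpdate("A", 3000.0, 2.0), PublisherUpdate("B", 3001.0), PublisherUpdate("C", 3500.0)]
print(aggregate(updates).value)                   # 3001.0 (robust to the outlier C)
print(aggregate(updates, "mean").value)           # 3167.0 (outlier drags the average up)
print(aggregate(updates, "weighted_mean").value)  # 3125.25
```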

3. Subscribers

Subscribers are the end consumers of the data: smart contracts, dApps, DeFi protocols, trading bots, analytical platforms, etc.

There are two ways subscribers operate:

A. Chain Pusher (ready-made solution from Stork)

- Sends TNV updates to the blockchain automatically when the data changes significantly. Suitable for standard scenarios where frequency and stability are important.

B. Integration into dApp

- A more flexible method: data is sent on-chain within the user's own transaction. This means the dApp can use the data immediately, without intermediate logic.

- This approach is especially important for Mitosis — here each system module can choose which data it needs and manage sending based on its own logic.

4. On-Chain Contracts

The final layer — contracts that store data on-chain. They:

- verify aggregator signatures;

- check data freshness;

- make data available to other contracts.

- If data is outdated or the signature is invalid, the update is rejected.

Thus, no unauthenticated or outdated information can enter the blockchain.

What TNV is and why it is not just a "price"

All data in Stork is transmitted as TNV (Temporal Numeric Value):

timestamp + numeric value

At first glance it seems simple, but that simplicity is exactly where its power lies.

TNV allows transmitting not only market prices but any numeric parameter at a given moment:

- price of ETH, BTC, or LP token;

- number of user steps per day;

- TVL level in a protocol;

- air temperature;

- social activity or engagement;

- event probability;

- even smart contract activity.

- The main requirements are high precision (up to 18 decimal places) and a valid timestamp.
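Here is a small Python sketch of a TNV as "timestamp + numeric value". It assumes the value is stored as an integer scaled by 10^18 (18 decimal places, a common convention on EVM chains) and that timestamps are in nanoseconds; both are assumptions, and Stork's actual encoding may differ. The same structure holds a price, a step count, or a temperature.

```python
# Sketch of a TNV: a timestamp plus a value stored as an integer scaled by 10**18.
from dataclasses import dataclass
from decimal import Decimal, localcontext

DECIMALS = 18
SCALE = 10**DECIMALS


@dataclass(frozen=True)
class TNV:
    timestamp_ns: int  # when the value was observed (assumed unit: nanoseconds)
    quantized: int     # value * 10**18, stored as an integer

    @classmethod
    def from_decimal(cls, timestamp_ns: int, value: str) -> "TNV":
        """Build a TNV from a decimal string, rejecting more than 18 decimal places."""
        with localcontext() as ctx:
            ctx.prec = 100  # enough digits that scaling never rounds silently
            scaled = Decimal(value) * SCALE
            if scaled != scaled.to_integral_value():
                raise ValueError("value has more than 18 decimal places")
            return cls(timestamp_ns, int(scaled))

    def as_decimal(self) -> Decimal:
        """Convert the scaled integer back to a human-readable decimal."""
        with localcontext() as ctx:
            ctx.prec = 100
            return Decimal(self.quantized) / SCALE


ts = 1_700_000_000_000_000_000
price = TNV.from_decimal(ts, "3000.12")      # an ETH price
temperature = TNV.from_decimal(ts, "-3.5")   # or any other numeric reading
print(price.quantized)                       # 3000120000000000000000
```

Working in scaled integers rather than floats keeps every value exact up to the 18th decimal place, which is what on-chain arithmetic expects.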

What TNV gives to systems like Mitosis

Mitosis is a modular system, and each of its modules can use different data sources. Thanks to TNV:

- data becomes universal and applicable to any type of logic;

- Mitosis modules can use custom feeds, for example, data about staking, trading activity, user engagement;

- it is possible to build non-standard DeFi strategies based not only on price but any numeric parameters;

- each module can subscribe only to the feeds it really needs — no "universal" data.

Stork thus becomes a universal data bus for any architecture, from a simple AMM to a complex cross-chain model like Mitosis.
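As a rough illustration of "each module subscribes only to the feeds it needs", here is a minimal Python publish/subscribe sketch. The `FeedBus` class, the feed IDs, and the module names are hypothetical; it shows the routing idea only, not how Stork or Mitosis actually wire subscriptions.

```python
# Hypothetical per-module subscription bus: modules register only for the feed
# IDs they need, and each update reaches only that feed's subscribers.
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, int, int], None]  # (feed_id, timestamp_ns, value)


class FeedBus:
    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, feed_id: str, handler: Handler) -> None:
        self._subscribers[feed_id].append(handler)

    def publish(self, feed_id: str, timestamp_ns: int, value: int) -> int:
        """Deliver an update to the feed's subscribers; return how many received it."""
        handlers = self._subscribers.get(feed_id, [])
        for handler in handlers:
            handler(feed_id, timestamp_ns, value)
        return len(handlers)


# Two hypothetical Mitosis modules, each subscribed to a different feed.
received: list[tuple[str, str, int]] = []
bus = FeedBus()
bus.subscribe("ETHUSD", lambda feed, ts, v: received.append(("risk-module", feed, v)))
bus.subscribe("VLF_ETH_YIELD", lambda feed, ts, v: received.append(("settlement-module", feed, v)))
print(bus.publish("ETHUSD", 1, 3000))   # 1
print(bus.publish("BTCUSD", 1, 60000))  # 0 (no module asked for it)
print(received)                         # [('risk-module', 'ETHUSD', 3000)]
```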

Cryptography and verifiability at all levels

Stork uses ECDSA (secp256k1) — the same cryptography that underlies Ethereum.

Signatures are applied:

1. At the Publisher level

- each data source signs its TNV updates with an EVM-compatible signature. This confirms that the data is indeed from them and has not been changed.

2. At the Aggregator level

- the aggregator collects signatures from all publishers and forms its final signature — already on the aggregated value, indicating sources, aggregation method, and timestamp.

3. When sending to On-Chain contract

- the smart contract verifies:

  - the aggregator signature;

  - the aggregator's permissions;

  - timestamp freshness.

If at least one check fails — the data is rejected.
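The three on-chain checks can be sketched in Python like this. A real contract would be written in Solidity and would revert instead of returning `False`; here signature verification is passed in as a function (in practice an ECDSA/secp256k1 check) so the example stays focused on the acceptance rules. All names are hypothetical, and the final "never overwrite with older data" check is an extra assumption of mine rather than something stated above.

```python
# Sketch of on-chain acceptance checks (hypothetical names; Python for readability).
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SignedUpdate:
    feed_id: str
    timestamp_s: int
    value: int        # e.g. a value scaled by 10**18
    signer: str       # claimed aggregator identity
    signature: bytes


class FeedStore:
    def __init__(self, authorized_signers: set[str], max_age_s: int,
                 verify_sig: Callable[[SignedUpdate], bool]) -> None:
        self.authorized = authorized_signers
        self.max_age_s = max_age_s
        self.verify_sig = verify_sig
        self.latest: dict[str, SignedUpdate] = {}  # readable by other "contracts"

    def submit(self, update: SignedUpdate, now_s: int) -> bool:
        """Store the update only if every check passes; otherwise reject it."""
        if not self.verify_sig(update):
            return False  # 1. signature invalid
        if update.signer not in self.authorized:
            return False  # 2. signer lacks aggregator permissions
        if now_s - update.timestamp_s > self.max_age_s:
            return False  # 3. timestamp too old
        current = self.latest.get(update.feed_id)
        if current is not None and update.timestamp_s <= current.timestamp_s:
            return False  # extra assumption: never overwrite with older data
        self.latest[update.feed_id] = update
        return True


# Usage with a stub verifier standing in for real ECDSA verification
store = FeedStore({"0xAGG"}, max_age_s=60, verify_sig=lambda u: u.signature == b"valid")
print(store.submit(SignedUpdate("ETHUSD", 1_000, 3000, "0xAGG", b"valid"), now_s=1_010))   # True
print(store.submit(SignedUpdate("ETHUSD", 1_020, 3001, "0xEVIL", b"valid"), now_s=1_025))  # False
```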

- The subscriber can also verify all signatures off-chain, which makes the system well suited to off-chain analytics and high-frequency trading.

Modularity, scalability and extensibility

- Each Aggregator can be individually configured for the application.

- Performance does not degrade as the number of assets grows.

- Through TNV it is possible to digitize any data — from sports results to business metrics.

One of the most frequent concerns when working with oracles is scalability. What if the number of assets grows from 10 to 1,000? Or if data must be updated every 300 ms instead of every 10 minutes?

Stork scales horizontally

Thanks to its architecture, Stork avoids a single bottleneck. Aggregators can be scaled horizontally: each aggregator serves only its own subset of feeds, so adding new ones does not overload the system. Want to update 200 assets across 10 different networks at once? No problem: tasks can be distributed among aggregators and publishers.
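One simple way to picture "each aggregator serves only its own subset of feeds" is hash partitioning. The Python sketch below assumes a stable hash maps each feed to exactly one aggregator; the scheme, feed IDs, and aggregator names are hypothetical, and Stork's real assignment mechanism may differ.

```python
# Sketch: partition feeds across aggregators with a stable hash (hypothetical scheme).
import hashlib
from collections import defaultdict


def assign(feed_id: str, aggregators: list[str]) -> str:
    """Map a feed to one aggregator; the same inputs always give the same answer."""
    digest = hashlib.sha256(feed_id.encode()).digest()
    return aggregators[int.from_bytes(digest[:8], "big") % len(aggregators)]


def partition(feeds: list[str], aggregators: list[str]) -> dict[str, list[str]]:
    """Group feeds by the aggregator responsible for them."""
    shards: dict[str, list[str]] = defaultdict(list)
    for feed in feeds:
        shards[assign(feed, aggregators)].append(feed)
    return dict(shards)


# 200 assets x 10 networks = 2,000 feeds spread over 8 aggregators
feeds = [f"ASSET{i}@chain{j}" for i in range(200) for j in range(10)]
aggregators = [f"aggregator-{k}" for k in range(8)]
shards = partition(feeds, aggregators)
print(sum(len(group) for group in shards.values()))  # 2000
```

Each aggregator ends up with roughly 250 feeds, and adding capacity means adding aggregators rather than making one node faster. One design caveat: with plain modulo hashing, changing the number of aggregators reassigns most feeds; consistent hashing is the usual way to limit that churn.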

Latency does not grow with volume

This is key. Even if the system has thousands of assets, updating a specific feed does not become slower.

Each feed is processed independently, and its update frequency can remain sub-second (for example, every 500 ms or at a 0.1% price change).

This architecture makes Stork not just an oracle but a specialized system for transmitting verifiable data, including the performance of DeFi strategies, which makes it well suited to complex cross-chain systems like Mitosis.

Stork ensures cryptographically secure synchronization of VLF state between branch chains and Mitosis Chain, which is critically important for accurate settlement and protection from manipulation.

For more detailed information, check out:

- Stork Documentation 👉 https://docs.stork.network/introduction/how-it-works

- Mitosis Documentation 👉 https://docs.mitosis.org/learn/introduction/what-is-mitosis

- Mitosis Twitter 👉 https://x.com/MitosisOrg

- Stork Twitter 👉 https://x.com/StorkOracle

- Mitosis Official Website 👉 https://mitosis.org/

Follow the author on Twitter 👉 https://x.com/mEden2452
